I’ve spent years reviewing and testing all kinds of graphics cards, from mainstream GPUs to powerful, top-tier models that challenge the limits of performance. Yet the RTX 5090 Founders Edition feels like a significant leap forward for creators, gamers, and anyone diving deep into AI or 3D rendering.

It’s not simply about raw power—though there’s plenty of it—but also about how it handles complex media formats, high-end video tasks, and emerging AI workloads. In this comprehensive post, I’ll walk you through the technical details, real-world performance, and practical considerations so you can decide if this powerhouse of a GPU deserves a spot in your setup.

From the outset, the RTX 5090 Founders Edition is positioned as the fastest and most powerful dual-slot GPU currently on the market. It draws attention not just for its raw specs—like the new GDDR7 memory, an increased count of CUDA cores, or its advanced TSMC 4nm process node—but also for the specialized improvements in AI processing, media encoding/decoding, and multi-stream 4K or 8K editing.

If you’re working with large video files, complex rendering tasks, or local AI model training, you might find it worth every watt and penny. But if you’re doing more casual gaming or light editing, is it still worth the jump from your current GPU?

Let’s jump right in. Below, I’ll explore everything from the GPU’s architectural foundation to how it performs under real workloads like DaVinci Resolve, Blender, Lightroom Classic, and more.

This is a long one, so buckle up: we’ll go through benchmarks, power consumption, and pros and cons, then work out whether it’s time to upgrade.

What Makes the RTX 5090 Founders Edition So Special?

I like to look beyond the buzz and examine what truly makes a GPU stand out. The RTX 5090 Founders Edition is marketed as the “fastest and most powerful” in its class—and after spending serious time examining it, I get where the hype comes from.

Right now, it seems that NVIDIA has homed in on a specific combination of raw horsepower, AI-oriented architecture, and robust media capabilities. If you’re a creator, especially someone who handles intense video workloads or AI tasks, you’ll probably feel right at home with this GPU.

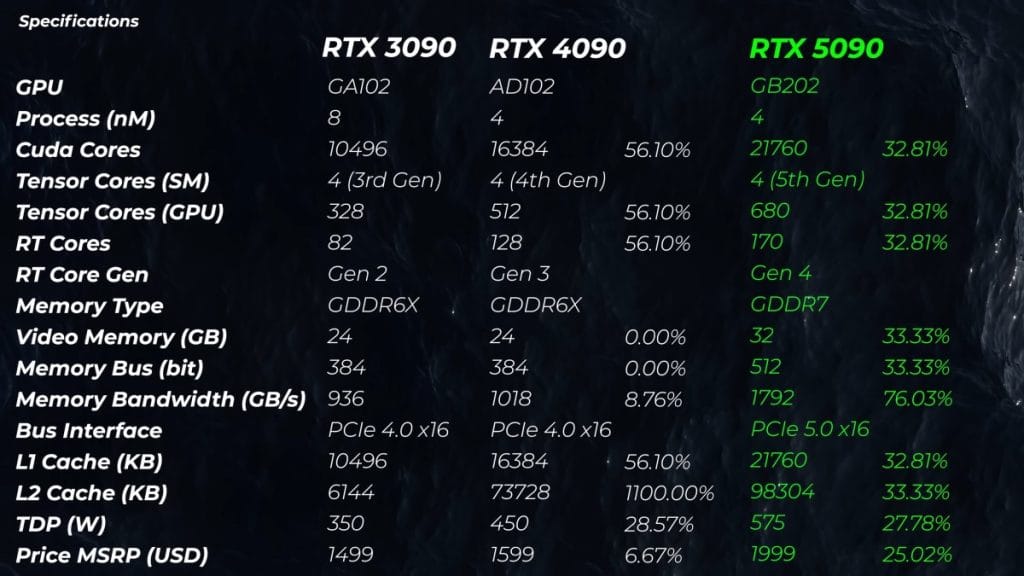

However, every big product launch comes with some caveats and considerations. While the jump from RTX 3090 to 4090 was enormous, some users might find the generational leap from 4090 to 5090 a bit more incremental—at least at first glance.

Yet once you see how it handles tasks like 4:2:2 video decoding, 8K playback, or local generative AI, you begin to understand why many consider it a “revolutionary” addition to NVIDIA’s lineup.

A Quick Overview of the Technical Specs

The RTX 5090’s headline specs are enough to catch any power user’s attention. Based on TSMC’s 4nm process node (N4), the GPU packs more transistors into a smaller space, offering improved efficiency and performance potential.

You’ll also find a sizable increase in CUDA cores—around 32% more than the RTX 4090. While not as large a jump as going from the 3090 (Samsung’s 8nm) to the 4090 (4nm), it still carries significant weight in real-world tasks.

Below are the major highlights of this card that I think you should keep in mind as you read on:

- Process Node: TSMC 4nm, offering higher transistor density and lower power leakage.

- Memory Upgrade: Moves from GDDR6X to GDDR7, with a robust 512-bit memory interface.

- VRAM Capacity: 32GB, which is 8GB more than both the RTX 4090 and the RTX 3090 (24GB each).

- Memory Bandwidth: Up to 1792 GB/s, roughly 78% more than the RTX 4090’s 1008 GB/s.

- AI and Ray Tracing Cores: Refined Tensor and RT cores for next-level DLSS performance, real-time ray tracing, and AI computing.

- PCIe 5.0 x16: Ideal for next-gen CPU platforms, delivering high data transfer rates for bandwidth-heavy tasks.

- Power Consumption: Official figures suggest around 575W under heavy load, and overclocking can push that to 600W or more.

- Cooling Design: Despite drawing significant power, it retains a dual-slot form factor with advanced cooling solutions in the Founders Edition.

I’ll be diving deeper into each of these points, but this list should give you an idea of the raw capabilities. If you’re already concerned about power draw or case space, we’ll cover that in more detail later on.
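If you want to sanity-check the memory figures, here’s a quick back-of-the-envelope sketch in Python. It takes the 512-bit bus and 1792 GB/s from the list above and works out the per-pin data rate they imply. The function names are my own, purely for illustration.

```python
# Peak memory bandwidth (GB/s) = bus width (bits) x per-pin data rate (Gbit/s) / 8.
# The two constants come from the spec list above; everything else is derived.

BUS_WIDTH_BITS = 512    # memory interface width
BANDWIDTH_GB_S = 1792   # quoted peak memory bandwidth


def per_pin_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by a bandwidth and a bus width."""
    return bandwidth_gb_s * 8 / bus_width_bits


def peak_bandwidth_gb_s(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth (GB/s) for a given bus width and per-pin data rate."""
    return bus_width_bits * pin_rate_gbps / 8


rate = per_pin_rate_gbps(BANDWIDTH_GB_S, BUS_WIDTH_BITS)
print(f"Implied per-pin rate: {rate:.0f} Gbit/s")
print(f"Round trip: {peak_bandwidth_gb_s(BUS_WIDTH_BITS, rate):.0f} GB/s")
```

The two quoted numbers are consistent with each other: a 512-bit bus at 28 Gbit/s per pin gives exactly 1792 GB/s.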

Architecture Deep Dive: Node Differences and L2 Cache Growth

A big part of the RTX 5090’s story is its fabrication node and how NVIDIA leveraged TSMC’s 4nm process. Previously, the 3090 used an 8nm Samsung node, making the jump to 4nm on the 4090 a huge leap. Now that the 5090 shares that 4nm foundation, the architecture focuses on refinements and expansions rather than an entirely new process shrink.

For instance, the L2 cache size—so important for quick data access—grew by about 32–33% compared to the RTX 4090. That difference can be significant in real-world rendering scenarios where a large chunk of data needs to be accessed rapidly.

On top of that, you still get crucial enhancements to tensor cores (for AI tasks) and RT cores (for ray-traced graphics or 3D modeling scenes that rely heavily on advanced lighting algorithms).

This architectural approach means that while the base clock improvements might not be astronomical, the card benefits from better parallelization and memory bandwidth. Combined, these factors help deliver that extra edge in specialized tasks.

Benchmarking Methodologies: How I Tested the Card

When evaluating a GPU of this caliber, I like to test it with a range of applications and benchmarks that capture both synthetic performance and real-world tasks.

To keep things consistent, I used a high-performance system built around an Intel Core i9 CPU, 64GB of DDR4 memory, a reliable 1200W power supply, and a fast NVMe SSD for my primary storage.

Admittedly, this DDR4-based platform can hold the card back slightly, since the RTX 5090 is best paired with newer platforms that can fully leverage PCIe 5.0. But the system still provides a robust test environment for comparing results across different GPUs, including the RTX 3090, 4090, and an AMD Radeon RX 7900 XTX. Benchmarks included:

- Geekbench 6 (OpenCL and Vulkan): Offers a quick performance baseline for computational tasks.

- DaVinci Resolve: Highlights GPU acceleration in video editing, focusing on both encoding and playback of complex codecs.

- Premiere Pro & After Effects: Tests advanced effects, exports, and GPU acceleration for various video workflows.

- Lightroom Classic & Photoshop: Evaluates GPU-based filters, exporting, and AI-driven features like object selection and content-aware fill.

- Blender & V-Ray: Checks pure 3D rendering performance in real-world scenes.

- FurMark: Useful for maximum power draw measurements and thermal stress testing.

In each test, I took careful note of peak power usage, thermal behavior, and any driver-related quirks—especially since brand-new cards often require software patches to unlock their full potential in specific tasks. Below, you’ll see how this data translates into everyday performance gains or, in some cases, only modest improvements.
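If you’d like to log the same kind of data on your own machine, here’s a minimal sketch of one way to do it. It is not the exact tooling behind the numbers in this review. It assumes NVIDIA’s `nvidia-smi` utility is installed and on your PATH, and the helper names (`parse_sample`, `read_gpu_sample`, `log_samples`, `peak`) are hypothetical.

```python
import csv
import io
import subprocess
import time

# Fields requested from nvidia-smi: board power (W), GPU temperature (C), SM clock (MHz).
QUERY_FIELDS = ["power.draw", "temperature.gpu", "clocks.sm"]


def parse_sample(line: str) -> dict:
    """Parse one CSV line of nvidia-smi output into floats keyed by field name.

    Raises ValueError if a field is reported as non-numeric (e.g. "[N/A]").
    """
    values = next(csv.reader(io.StringIO(line)))
    return {name: float(value.strip()) for name, value in zip(QUERY_FIELDS, values)}


def read_gpu_sample(gpu_index: int = 0) -> dict:
    """Query nvidia-smi once for the given GPU and return the parsed sample."""
    result = subprocess.run(
        [
            "nvidia-smi",
            f"--id={gpu_index}",
            f"--query-gpu={','.join(QUERY_FIELDS)}",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_sample(result.stdout.strip().splitlines()[0])


def log_samples(count: int, interval_s: float = 1.0, gpu_index: int = 0) -> list:
    """Collect `count` samples, sleeping `interval_s` seconds between reads."""
    samples = []
    for _ in range(count):
        samples.append(read_gpu_sample(gpu_index))
        time.sleep(interval_s)
    return samples


def peak(samples: list, field: str) -> float:
    """Highest observed value of one field across all samples."""
    return max(sample[field] for sample in samples)
```

Running something like `peak(log_samples(60), "power.draw")` while a stress test is active would give you a rough peak-power figure for that minute. Keep in mind that software polling at one-second intervals can miss very short spikes.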

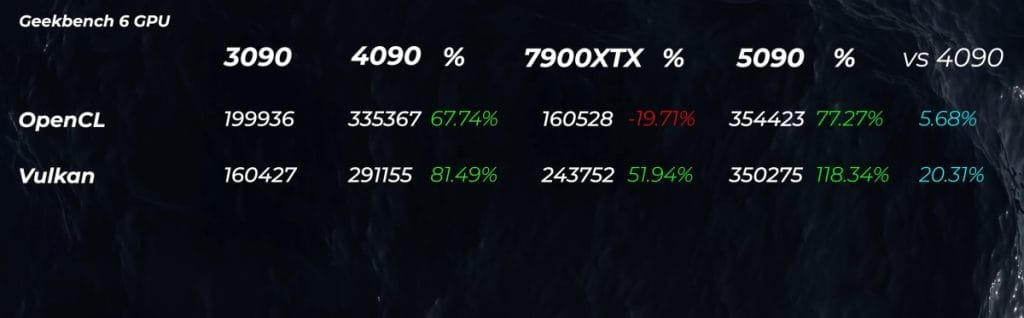

Geekbench Insights: OpenCL and Vulkan Performance

Geekbench 6 helps gauge raw compute performance in both OpenCL and Vulkan contexts. Compared to an RTX 3090, the RTX 5090 shows about a 77% uplift in OpenCL and more than double the performance in Vulkan tests. Even up against the mighty RTX 4090, you might see anywhere from 5% to 20% gains, depending on which part of the benchmark is being stressed.

If you’re sitting on a 3090 or another GPU from that generation, upgrading to a 5090 could feel like night and day in certain compute-heavy workflows. For 4090 owners, the performance bump may be less jaw-dropping but still noticeable—particularly if you’re running GPU-bound tasks that leverage Vulkan or advanced compute capabilities.

OpenCL vs. Vulkan: Why It Matters

Not everyone cares about these APIs, but they do factor into many creative applications. OpenCL is widely adopted in software like Photoshop and DaVinci Resolve for GPU acceleration.

Vulkan is increasingly used in modern gaming engines and some professional tools for its low-overhead, high-performance approach. Seeing improvements in both suggests well-rounded performance gains, whether you’re editing, rendering, or gaming.

Photo Editing: Photoshop and Lightroom Classic

Most people don’t associate top-tier GPUs with photo editing, but Adobe has been ramping up GPU acceleration. Complex filters, AI-based object selection, and even some exporting tasks can now rely on GPU power to speed things up.

The RTX 5090 excels in these areas, scoring up to 54% higher than the RTX 3090 in overall Photoshop benchmarks. If you rely heavily on large PSD files or advanced AI features, that difference is big enough to shave noticeable time off your workflow.

Lightroom Classic, on the other hand, can behave unpredictably with brand-new GPUs, especially if the drivers are still maturing. In some tests, older GPUs might keep pace in certain tasks, and that can be due to software not fully recognizing or utilizing new GPU features.

Over time, driver updates and Adobe patches typically close these gaps. I expect the 5090’s advantages to become more pronounced as Lightroom Classic and other Adobe products optimize their GPU acceleration for the latest hardware.

Video Editing Capabilities: A Look into the Media Engines

Video editors dealing with high-res footage at high bitrates need more than just a strong CPU. In many workflows, the GPU’s specialized encoders and decoders, known collectively as the “media engines,” can dramatically affect real-time playback, scrubbing, and export times.

The RTX 5090 includes three encoders and two decoders, supporting advanced formats like H.264 and H.265 (including 4:2:2 variations) that can bring lesser systems to their knees.

One particular highlight is how these media engines can handle multiple 4K streams. You’re looking at up to nine streams of 4K at 60 frames per second, or a similarly impressive throughput for 8K video.
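To put the nine-stream figure in perspective, here’s a rough sketch that converts it into raw pixel throughput and asks how many 8K streams the same pixel rate would cover. This assumes decoder throughput scales with pixel count, which is a simplification (codec, bitrate, and chroma format all matter), and it assumes the standard UHD resolutions of 3840×2160 and 7680×4320. The function names are illustrative only.

```python
def pixel_rate(width: int, height: int, fps: int, streams: int = 1) -> int:
    """Pixels per second across a number of identical video streams."""
    return width * height * fps * streams


def equivalent_streams(total_pixel_rate: int, width: int, height: int, fps: int) -> float:
    """How many streams of a given format fit into a total pixel rate."""
    return total_pixel_rate / pixel_rate(width, height, fps)


# Nine streams of 4K at 60 fps, as quoted above.
nine_4k60 = pixel_rate(3840, 2160, 60, streams=9)
print(f"9 x 4K60: {nine_4k60 / 1e9:.2f} gigapixels per second")

# The same pixel budget expressed as 8K60 streams (pixel-count proxy only).
print(f"Equivalent 8K60 streams: {equivalent_streams(nine_4k60, 7680, 4320, 60):.2f}")
```

Since an 8K frame has four times the pixels of a 4K frame, the same budget covers a little over two 8K60 streams on this simple proxy.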

If you frequently edit multi-cam footage, this horsepower can be game-changing. Scrubbing through multiple timelines often becomes seamless, even without generating proxies.

In my own testing with advanced editing setups, the 5090 rarely dropped frames, even when loaded with multiple 4K clips featuring heavy color grading. Where older GPUs or less capable encoders would struggle, the 5090 maintained smooth real-time playback. This means faster editing workflows and less time waiting on your timeline to catch up to your edits.

DaVinci Resolve: Capitalizing on GPU Acceleration

DaVinci Resolve is heavily optimized for GPU acceleration, especially when it comes to color grading and timeline playback of compressed codecs. The RTX 5090 can show a 126% gain over the RTX 3090 for certain long-GOP workloads. Even if you’ve been using a 4090, you might see a sizeable performance bump, particularly when layering multiple effects and transitions on top of your footage.

Overall export times were also impressive. Tasks that used to peg the CPU at 100% can now be offloaded to the GPU’s encoders, freeing up system resources for other parallel operations or smoothing out the editing process.

This is especially useful if you’re short on time or constantly multitasking. If DaVinci Resolve is your main NLE (non-linear editor), you’ll feel right at home with the 5090’s media engine support.

Premiere Pro and After Effects

Although software support at launch can be incomplete, Premiere Pro is expected to catch up quickly. Some initial benchmarks showed only modest gains over the 4090, but once the encoding and decoding features are fully utilized by Adobe’s updates, I anticipate faster exports and smoother real-time playback.

After Effects, known for its reliance on CPU for many tasks, can still benefit from GPU-accelerated effects and previews. The advantage is smaller compared to DaVinci Resolve, yet it’s there—particularly for effects relying on advanced shaders and 3D ray-traced compositions.

In the future, as these applications leverage the multi-encoder/multi-decoder design more aggressively, the 5090 may well become the go-to GPU for professional video editors who want the fastest possible turnaround times.

3D Rendering: Blender, V-Ray, and Beyond

While gamers look at frames per second, creators using Blender, V-Ray, or other 3D rendering engines focus on how quickly a GPU can produce final frames. The RTX 5090’s architecture shines here, delivering more CUDA cores, refined ray tracing, and enough VRAM to handle massive scenes without stuttering or memory overflows.

Blender Benchmarks and Real-World Performance

In Blender, the jump from the 3090 to the 4090 was already huge, often hitting nearly double the performance in some demanding scenes. The 5090 adds another 15–20% on top of what the 4090 could do, which can be a meaningful time saver if you’re constantly rendering high-polygon scenes or working with complex lighting. When every frame matters, that performance difference quickly translates into hours saved on large projects.

A few test scenes showcased two-and-a-half times the speed compared to the 3090. If you’re used to waiting on big renders, you’ll notice the difference immediately. Plus, if you do a lot of viewport rendering with real-time ray tracing and advanced shading, the extra horsepower feels smoother, letting you interact with your 3D scenes more intuitively.
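Speedup percentages are easy to misread, so here’s a small sketch that turns them into wall-clock time. The 10-hour baseline job is a made-up example, not one of my test scenes, and the speedup factors are the ones quoted in this section.

```python
def time_after_speedup(baseline_hours: float, speedup: float) -> float:
    """Render time after a speedup factor (2.5 means '2.5x as fast')."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_hours / speedup


def percent_time_saved(speedup: float) -> float:
    """Share of the original render time that a speedup factor removes."""
    return (1 - 1 / speedup) * 100


BASELINE_HOURS = 10.0  # hypothetical job length on the older card

for label, speedup in [
    ("2.5x over the 3090", 2.5),
    ("15% over the 4090", 1.15),
    ("20% over the 4090", 1.20),
]:
    hours = time_after_speedup(BASELINE_HOURS, speedup)
    saved = percent_time_saved(speedup)
    print(f"{label}: {hours:.1f} h ({saved:.0f}% less time)")
```

A 2.5x speedup turns a 10-hour render into 4 hours, while a card that is 15–20% faster trims the same job to roughly 8.3–8.7 hours. That is why the upgrade feels dramatic from a 3090 and more modest from a 4090.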

V-Ray and RT Cores in Action

V-Ray is another popular benchmark for professional 3D work. The RTX 5090 can be up to 30–40% faster than a 4090, depending on the scene and whether CUDA cores or ray tracing cores are the primary bottleneck. You’ll notice these improvements most prominently when you’re pushing the limits of ray tracing, reflections, and advanced light bounces.

For studios that produce high-end animations or architectural visualizations, this can free up workstations to handle more tasks in parallel or to finish jobs on tighter deadlines. From my perspective, that additional speed is a welcome jump for those who rely on GPU rendering in their everyday pipeline.

The Significance of the 32GB VRAM

Another big selling point is the increased VRAM—32GB of GDDR7. In some 3D workflows, especially those involving 8K textures or vast, highly detailed environments, extra VRAM means fewer out-of-memory errors and a smoother process overall.

With large-scale GPU rendering, you’re often only as good as the memory available. Running out of VRAM forces a fallback to system RAM, which cripples performance. The 5090 reduces this risk, letting you tackle bigger scenes or higher-resolution textures without sacrificing speed.
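To give a feel for how quickly textures eat VRAM, here’s a rough sketch. It assumes an “8K texture” means 8192×8192 texels, stored uncompressed at 4 bytes per texel (RGBA, 8 bits per channel) with a full mipmap chain, and it treats the card’s capacity as GiB for simplicity. Real renderers compress textures and also need memory for geometry and frame buffers, so treat the result as an upper bound. The function names are my own.

```python
GIB = 1024 ** 3


def texture_bytes(width: int, height: int, bytes_per_texel: int = 4, mipmaps: bool = True) -> int:
    """Bytes used by one uncompressed texture, optionally with its full mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, down to 1x1.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


def textures_that_fit(vram_gib: int, width: int = 8192, height: int = 8192) -> int:
    """How many such textures fit if VRAM held nothing else (an upper bound)."""
    return (vram_gib * GIB) // texture_bytes(width, height)


one_texture_mib = texture_bytes(8192, 8192) / (1024 ** 2)
print(f"One mipmapped 8K texture: about {one_texture_mib:.0f} MiB")
for vram in (24, 32):
    print(f"{vram} GiB card: at most {textures_that_fit(vram)} textures")
```

Under those assumptions, one mipmapped 8K texture takes about 341 MiB, so a 24GB card tops out at 72 of them and a 32GB card at 96.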

AI Capabilities: Local LLMs, Generative Art, and More

I’m more excited than ever about the role of GPUs in the AI world, and the RTX 5090 stands out here. It’s not just about deep learning super sampling (DLSS) for gaming but also about the potential for local AI tasks.

If you’re exploring large language models (LLMs), generative art, or other machine learning workloads, the 5090’s tensor cores and high memory bandwidth give you room to experiment and train with bigger datasets.
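For local LLMs, the first question is usually whether the model even fits. Here’s a rough, weights-only sketch: parameter count times bytes per parameter, plus a headroom factor for the KV cache and activations. The 20% headroom and the example model sizes are my own illustrative assumptions, not measurements, and training needs far more memory than inference because of gradients and optimizer state.

```python
def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8


def fits_in_vram(params_billion: float, bits_per_param: int, vram_gb: float,
                 overhead_fraction: float = 0.2) -> bool:
    """Rough inference fit check: weights plus a hypothetical overhead margin."""
    needed = weights_gb(params_billion, bits_per_param) * (1 + overhead_fraction)
    return needed <= vram_gb


# Hypothetical model sizes, chosen only to illustrate the arithmetic.
for params, bits in [(7, 16), (13, 16), (70, 4)]:
    size = weights_gb(params, bits)
    verdict_24 = "fits" if fits_in_vram(params, bits, 24) else "does not fit"
    verdict_32 = "fits" if fits_in_vram(params, bits, 32) else "does not fit"
    print(f"{params}B at {bits}-bit: {size:.0f} GB weights, "
          f"24GB: {verdict_24}, 32GB: {verdict_32}")
```

On this estimate, a 13-billion-parameter model at 16-bit precision needs 26 GB for weights alone. That is out of reach on a 24GB card without quantization but within the 5090’s 32GB.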

Compared to the RTX 4090, you can expect up to 20–30% more raw compute in certain AI tasks. And if you’re coming from the 3090, the difference can be staggering—sometimes nearing a 2X jump in performance, depending on how heavily the software leans on tensor cores and memory throughput. AI inference tasks also scale nicely, meaning you can handle more streams or higher batch sizes without crippling your system.

Another niche but growing area is generative AI for video workflows—imagine auto re-coloring or AI-based upscaling of entire video files. The advanced hardware encoders and decoders, combined with powerful tensor cores, can speed up such tasks significantly. If you’ve been itching to explore next-generation AI features, the 5090 is well-suited for it.

Power Consumption, Heat, and Practical Concerns

Let’s talk about power draw. NVIDIA officially lists around 575W under high load, and I observed similar spikes when stress testing with FurMark and heavy GPU rendering. If you try to push it further with overclocking tools, you might reach closer to 600W.

Technically, the GPU can split that load across the cables and PCIe slot for a maximum approaching 675W—but that’s pushing it to the extremes.
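Here’s where that 675W ceiling comes from, as a quick sketch. It assumes the usual limits of 600W for the card’s 16-pin power connector and 75W for a PCIe x16 slot. Those two limits are my assumption about the breakdown, since only the total is quoted above.

```python
CONNECTOR_LIMIT_W = 600  # assumed limit of the 16-pin power connector
SLOT_LIMIT_W = 75        # assumed limit of the PCIe x16 slot
RATED_POWER_W = 575      # NVIDIA's listed figure under high load


def max_deliverable_w(connector_w: int = CONNECTOR_LIMIT_W, slot_w: int = SLOT_LIMIT_W) -> int:
    """Total power the card can be fed through the connector and slot combined."""
    return connector_w + slot_w


def headroom_w(rated_w: int = RATED_POWER_W) -> int:
    """Margin between the delivery ceiling and the rated board power."""
    return max_deliverable_w() - rated_w


print(f"Delivery ceiling: {max_deliverable_w()} W")
print(f"Headroom above the rated {RATED_POWER_W} W: {headroom_w()} W")
```

So the card’s rated draw sits 100W under the theoretical delivery limit, which is the margin an aggressive overclock would be eating into.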

The good news is the idle power consumption rests at about 20W, which is relatively efficient for such a robust card. Still, if you plan to run the GPU at its peak for extended rendering or AI sessions, heat output can get serious.

Ensure you have adequate cooling. The Founders Edition cooler is advanced, but you’ll still want solid airflow through your case, especially if you’re in a warm environment.

- Robust PSU: A 1000W unit at minimum, and ideally 1200W, to leave headroom for other components.

- Case Ventilation: High static-pressure fans and unobstructed airflow channels are key to dumping heat efficiently.

- Monitoring Software: Keep track of GPU temperatures and clock speeds. If you see throttling, consider adjusting fan curves or your overall case setup.

- Long-Term Power Costs: Running 575–600W for hours can impact your electric bill, so weigh this if budget is a concern.

For short bursts of rendering or gaming, the brief spikes in power may not be as big of an issue. But professionals with nonstop production schedules should factor in the added energy costs and heat management.
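To estimate what that means for your bill, here’s a simple sketch. The 8 hours per day and the $0.15 per kWh rate are placeholder assumptions, so swap in your own usage and local tariff. It also counts only the GPU, not the rest of the system.

```python
def energy_kwh(watts: float, hours: float) -> float:
    """Energy used by a constant load, in kilowatt-hours."""
    return watts / 1000 * hours


def energy_cost(watts: float, hours: float, price_per_kwh: float) -> float:
    """Cost of running a constant load for a number of hours."""
    return energy_kwh(watts, hours) * price_per_kwh


HOURS_PER_DAY = 8      # hypothetical daily time at full load
PRICE_PER_KWH = 0.15   # hypothetical electricity rate in dollars
DAYS = 30

for watts in (575, 600):
    monthly_kwh = energy_kwh(watts, HOURS_PER_DAY * DAYS)
    monthly_cost = energy_cost(watts, HOURS_PER_DAY * DAYS, PRICE_PER_KWH)
    print(f"{watts} W: {monthly_kwh:.0f} kWh and ${monthly_cost:.2f} over {DAYS} days")
```

With those placeholder inputs, a sustained 575W load comes to about 4.6 kWh per working day, so heavy daily users will see it on the bill while occasional renderers probably won’t.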

Comparing 3090, 4090, and 5090: Which Upgrade Path Is Right for You?

The critical question: is the 5090 worth it for your specific needs? If you’re still on a 3090 or an older GPU, the jump can be transformative—particularly if you render in 3D, cut 4K or 8K videos, or need serious AI horsepower. The difference can feel like night and day, saving you hours or even days of rendering and export times over a large project cycle.

But what if you already have an RTX 4090? The jump is there, but it might not always be jaw-dropping in raw rasterized performance. You’ll see improvements of around 15–30% in certain workloads, more so if those tasks rely heavily on AI or advanced media encoding/decoding.

If you earn a living from faster renders or can’t stand waiting for large encodes, upgrading might pay off in the long run. Otherwise, you might find that a well-tuned 4090 remains sufficient for most workloads.

Real-World Observations and Nuances

Despite all the benchmarks, one area I always highlight is driver and software support. The RTX 5090, being cutting-edge, can run into situations where older applications or unoptimized software can’t leverage its full suite of features—yet. Over the next few months, driver releases and app updates typically boost new cards’ performance, smoothing out any early kinks.

For instance, certain versions of After Effects or Lightroom Classic might fail to see the full advantage of the 5090’s GPU acceleration because they haven’t been patched. Video editing software that doesn’t fully utilize the multi-encoder design could show only minor gains.

That said, once those updates roll in, this GPU’s headroom will likely become more evident. In the meantime, you still get to enjoy the raw horsepower, extra VRAM, and all-around advanced architecture.

Pros and Cons: A Final Verdict

Below, I’ll summarize the big wins and drawbacks I noticed. This quick reference should help you gauge if the RTX 5090 Founders Edition aligns with your goals and budget.

Pros

- Massive Performance: Delivers a significant jump for users of older GPUs, thanks to advanced CUDA cores, more VRAM, and higher memory bandwidth.

- Powerful AI and Media Engines: Ideal for heavy video workloads, multi-cam 4K/8K editing, or local LLM training.

- 32GB GDDR7 VRAM: Allows large scene handling in 3D or working with big video files without stuttering or memory overflows.

- Dual-Slot Founders Edition: Impressive cooling design that keeps thermals reasonable despite drawing up to 575W.

- High Memory Bandwidth: 1792 GB/s throughput ensures quick data transfer for rendering and AI-driven computations.

- Future-Proofing: PCIe 5.0 and advanced core design pave the way for next-gen workflows and software optimizations.

Cons

- Not a Huge Step from 4090 in All Areas: While improved, some tasks show only moderate gains over the 4090.

- High Power Usage: Can reach 575–600W, demanding robust cooling solutions and potentially raising electricity costs.

- Initial Software/Driver Support: Some applications may not fully exploit the GPU’s capabilities until future patches.

- Price Point: Premium cost could make it overkill for casual gamers or light content creators.

Whether the pros outweigh the cons depends on how heavily you push your system. If a 15–30% improvement can save you extensive render or encoding time, it might be worth every extra watt and dollar.

Who Should Upgrade and Who Can Wait?

I see three main categories of potential buyers. First, if you’re on a 3090 (or older) and do serious creative work—like 3D modeling, huge video projects, or AI tasks—the RTX 5090 can transform your workflow.

Second, if you rely heavily on advanced AI features, multi-encoder usage, or 4K/8K multi-cam editing, the 5090’s specialized media engines are a big deal.

Third, if you’re purely gaming or doing modest editing, you might not see a big difference over a 4090, so weigh that cost carefully.

As for me, I’m deeply engaged in video production and AI-based explorations. The time savings and ability to handle multiple high-resolution streams without frame drops are game-changers.

Yes, it’s an investment, but if each render or export is significantly faster, it can pay for itself in productivity gains—especially in a professional environment where deadlines and bottom lines matter.

Final Thoughts: A Glimpse into the Future of GPUs

To wrap it up, the RTX 5090 Founders Edition cements NVIDIA’s commitment to marrying advanced AI capabilities with top-tier rendering and media performance. While its architectural improvements over the 4090 might seem incremental in some areas, the overall package—expanded VRAM, improved L2 cache, refined tensor cores, and enhanced media engines—makes it feel like a genuine step forward for anyone working at the cutting edge of technology.

Is it revolutionary enough to justify an upgrade if you’re happy with a 4090? Possibly, if your workflow is oriented around tasks that push hardware limits daily. If you’re on older hardware, the difference may well blow you away.

And if you’re a professional in an industry where time is money—be it video production, 3D modeling, or AI research—then the RTX 5090 could quickly become your most valuable asset.

Thanks for joining me on this deep dive. I hope it helps you decide if you’re ready to embrace the future of GPU technology or if you’d be better served waiting for software optimizations and potential price drops.

Either way, we’re witnessing an exciting era of graphics hardware, and the RTX 5090 is leading the charge into uncharted territory for creators, gamers, and AI enthusiasts alike.